In the world of microservices and container orchestrators, containers are ephemeral by nature: they are not supposed to last long, and even when they do, they are not supposed to retain data over their life cycle. Container orchestrators like Kubernetes replace a crashed or exited container with just another instance of it. Even though that's how containers are used, applications themselves need to maintain their own state and preserve some application data across container life cycles, either in databases or on the host file system. That's where managing data inside and outside the container comes into the picture.

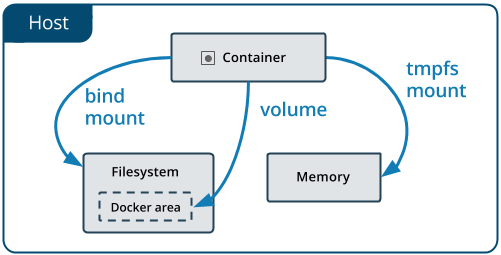

- Docker provides two options for containers to store files on the host machine, so that the files persist even after the container stops or exits: volumes and bind mounts.

- Docker also supports containers storing files in memory on the host machine. Such files are not persisted.

- If you're running Docker on Linux, a tmpfs mount is used to store files in the host's system memory.

- If you're running Docker on Windows, you can use a named pipe instead. Note that an npipe mount is meant for communication between the Docker host and a container (for example, reaching the Docker Engine API) rather than for storing files.

Differences between volumes, bind mounts, and tmpfs mounts:

Volumes:

- Stored in a part of the host filesystem which is managed by Docker (/var/lib/docker/volumes/ on Linux).

- A given volume can be mounted into multiple containers simultaneously.

- When no running container is using a volume, the volume is still available to Docker and is not removed automatically.

- Non-Docker processes should not modify this part of the filesystem.

- Volumes are the best way to persist data in Docker.

- Volumes also support the use of volume drivers, which allow you to store your data on remote hosts or cloud providers, among other possibilities.

- Volumes work on both Linux and Windows containers.

Use-cases:

- Sharing data among multiple running containers. Multiple containers can mount the same volume simultaneously, either read-write or read-only.

- When you want to store your container's data on a remote host or a cloud provider, rather than locally.

- When you need to back up, restore, or migrate data from one Docker host to another. You can stop the containers using the volume, then back up the volume's directory (such as /var/lib/docker/volumes/).

- When your application requires fully native file system behavior on Docker Desktop. For example, a database engine requires precise control over disk flushing to guarantee transaction durability.
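The back-up use-case above copies the volume's directory directly on the host. A common alternative, which does not need access to /var/lib/docker, is to mount the volume into a throwaway container and archive it with `tar`. The sketch below only builds the `docker run` argument lists; the `busybox` image, the `backup.tar` name, and the helper names are assumptions for illustration, and on a Docker host you would pass the lists to `subprocess.run`.

```python
from __future__ import annotations

import os
import shlex


def backup_volume_args(volume: str, backup_dir: str, archive: str = "backup.tar",
                       image: str = "busybox") -> list[str]:
    """Build a `docker run` argv that archives the contents of `volume` into backup_dir/archive."""
    host_dir = os.path.abspath(backup_dir)  # use an absolute host path, as Docker's own examples do
    return [
        "docker", "run", "--rm",
        # Mount the named volume read-only so the backup cannot change it.
        "--mount", f"type=volume,source={volume},target=/data,readonly",
        # Bind-mount the host directory that receives the archive (it must already exist).
        "--mount", f"type=bind,source={host_dir},target=/backup",
        image, "tar", "cf", f"/backup/{archive}", "-C", "/data", ".",
    ]


def restore_volume_args(volume: str, backup_dir: str, archive: str = "backup.tar",
                        image: str = "busybox") -> list[str]:
    """Build a `docker run` argv that extracts backup_dir/archive into `volume`."""
    host_dir = os.path.abspath(backup_dir)
    return [
        "docker", "run", "--rm",
        "--mount", f"type=volume,source={volume},target=/data",
        # The archive only needs to be read during a restore.
        "--mount", f"type=bind,source={host_dir},target=/backup,readonly",
        image, "tar", "xf", f"/backup/{archive}", "-C", "/data",
    ]


if __name__ == "__main__":
    # Print the commands; on a Docker host, run them with subprocess.run(args, check=True).
    print(shlex.join(backup_volume_args("myvol", "/tmp/backups")))
    print(shlex.join(restore_volume_args("myvol", "/tmp/backups")))
```

For a migration, copy the archive to the other host and run the restore command there; `docker run` creates the named volume if it does not exist yet.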

Bind mounts:

- May be stored anywhere on the host system.

- They may even be important system files or directories.

- Non-Docker processes on the Docker host or a Docker container can modify them at any time.

Cautions:

- One side effect of using bind mounts, for better or for worse, is that you can change the host filesystem via processes running in a container, including creating, modifying, or deleting important system files or directories. This is a powerful ability which can have security implications, including impacting non-Docker processes on the host system.

Use-cases:

- Sharing configuration files from the host machine to containers. Docker provides DNS resolution to containers by default by mounting /etc/resolv.conf from the host machine into each container.

- Sharing source code or build artifacts between a development environment on the Docker host and a container.
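For both use-cases, the bind mount is usually given an absolute host path, and mounting shared configuration read-only limits the risk described under Cautions. A minimal sketch, assuming a hypothetical `bind_mount_args` helper that produces the `--mount` arguments for `docker run`:

```python
from __future__ import annotations

import os
import tempfile


def bind_mount_args(host_path: str, container_path: str, readonly: bool = False) -> list[str]:
    """Return `--mount` arguments that bind-mount host_path at container_path.

    With --mount, Docker reports an error instead of creating a missing host path,
    so this sketch checks for it up front.
    """
    source = os.path.abspath(host_path)
    if not os.path.exists(source):
        raise FileNotFoundError(f"bind mount source does not exist: {source}")
    if not container_path.startswith("/"):
        raise ValueError("container path must be absolute")
    spec = f"type=bind,source={source},target={container_path}"
    if readonly:
        spec += ",readonly"  # the container can read the files but not change them
    return ["--mount", spec]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as project:
        # Source code shared read-write for development...
        print(bind_mount_args(project, "/app"))
        # ...and a configuration directory shared read-only.
        print(bind_mount_args(project, "/etc/myapp", readonly=True))
```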

tmpfs mounts:

- Stored in the host system’s memory only, and are never written to the host system’s filesystem.

- It can be used by a container during the lifetime of the container, to store non-persistent state or sensitive information.

- When the container stops, the tmpfs mount is removed, and files written there won’t be persisted.

- For instance, internally, swarm services use tmpfs mounts to mount secrets into a service’s containers.


Limitations of tmpfs mounts:

- Unlike volumes and bind mounts, you can’t share tmpfs mounts between containers.

- This functionality is only available if you’re running Docker on Linux.

Use-cases:

- When you do not want the data to persist either on the host machine or within the container. This may be for security reasons or to protect the performance of the container when your application needs to write a large volume of non-persistent state data.

- To temporarily store sensitive files that you don't want to persist in either the host or the container writable layer.

Use a tmpfs mount in a container:

- To use a tmpfs mount in a container, use the `--tmpfs` flag, or use the `--mount` flag with the `type=tmpfs` and `destination` options.

With `--mount`:

```shell
docker run -d -it --name <container-name> --mount type=tmpfs,destination=/<path-in-container> <image>:latest
```

Optional flags (can only be used with `--mount`):

- `tmpfs-size`: Size of the tmpfs mount in bytes. Unlimited by default.
- `tmpfs-mode`: File mode of the tmpfs in octal. For instance, `700` or `0770`. Defaults to `1777`, or world-writable.

With `--tmpfs`:

```shell
docker run -d -it --name <container-name> --tmpfs /<path-in-container> <image>:latest
```
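The `tmpfs-size` and `tmpfs-mode` options go inside the `--mount` value. A small sketch that builds such a flag, with a hypothetical helper name, and uses Python's standard `stat.filemode` to show what an octal mode means. For example, the default `1777` is world-writable with the sticky bit set, the same permissions `/tmp` usually has.

```python
from __future__ import annotations

import stat


def tmpfs_mount_args(destination: str, size_bytes: int | None = None,
                     mode: str | None = None) -> list[str]:
    """Build `--mount type=tmpfs,...` arguments; size and mode are optional."""
    parts = ["type=tmpfs", f"destination={destination}"]
    if size_bytes is not None:
        if size_bytes <= 0:
            raise ValueError("tmpfs-size must be a positive number of bytes")
        parts.append(f"tmpfs-size={size_bytes}")
    if mode is not None:
        int(mode, 8)  # raises ValueError if the mode is not valid octal
        parts.append(f"tmpfs-mode={mode}")
    return ["--mount", ",".join(parts)]


def describe_mode(mode: str) -> str:
    """Render an octal tmpfs-mode as an `ls -l` style string for a directory."""
    return stat.filemode(stat.S_IFDIR | int(mode, 8))


if __name__ == "__main__":
    # 64 MiB tmpfs at /app that only its owner can access.
    print(tmpfs_mount_args("/app", size_bytes=64 * 1024 * 1024, mode="700"))
    for m in ("1777", "700", "0770"):
        print(m, describe_mode(m))
```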

Create & Manage Volumes:

```shell
# Create a volume
docker volume create <name-of-volume>

# List volumes
docker volume ls

# Inspect a volume
docker volume inspect <name-of-volume>

# Remove a volume
docker volume rm <name-of-volume>
```
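`docker volume inspect` prints a JSON array with one object per volume, including its `Driver` and its `Mountpoint` (under /var/lib/docker/volumes/ for the local driver on Linux). A minimal sketch that runs the command through `subprocess` and extracts those fields; the parsing lives in its own function so it can be tried on a sample string, and `SAMPLE` below is illustrative rather than real output from a particular machine.

```python
from __future__ import annotations

import json
import subprocess


def parse_volume_inspect(output: str) -> dict[str, dict[str, str]]:
    """Map each volume name to its driver and mountpoint from `docker volume inspect` JSON."""
    return {
        vol["Name"]: {"driver": vol["Driver"], "mountpoint": vol["Mountpoint"]}
        for vol in json.loads(output)
    }


def inspect_volumes(*names: str) -> dict[str, dict[str, str]]:
    """Run `docker volume inspect` (requires a Docker installation) and parse the result."""
    result = subprocess.run(
        ["docker", "volume", "inspect", *names],
        capture_output=True, text=True, check=True,
    )
    return parse_volume_inspect(result.stdout)


# Illustrative sample in the shape of `docker volume inspect my-vol` output.
SAMPLE = """[
  {
    "CreatedAt": "2024-01-01T00:00:00Z",
    "Driver": "local",
    "Labels": {},
    "Mountpoint": "/var/lib/docker/volumes/my-vol/_data",
    "Name": "my-vol",
    "Options": {},
    "Scope": "local"
  }
]"""

if __name__ == "__main__":
    print(parse_volume_inspect(SAMPLE))
```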

Difference between the `--volume` and `--mount` flags:

- `--mount` is more explicit and verbose. The `-v` syntax combines all the options together in one field, while the `--mount` syntax separates them.

- If you need to specify volume driver options, you must use `--mount`.

- When using volumes with services, only `--mount` is supported.

- `--mount` consists of multiple key-value pairs, separated by commas, each consisting of a `<key>=<value>` tuple:
  - `type`: Can be `bind`, `volume`, or `tmpfs`.
  - `source` or `src`: For named volumes, this is the name of the volume. For anonymous volumes, this field is omitted.
  - `destination`, `dst`, or `target`: The path where the file or directory is mounted in the container.
  - `readonly` or `ro`: If present, causes the mount to be mounted into the container as read-only. Mounts are read-write (`rw`) by default. Read-only is useful when the container only needs read access to the data. Remember that multiple containers can mount the same volume, and it can be mounted read-write for some of them and read-only for others at the same time.
  - `volume-opt`: Can be specified more than once; takes a key-value pair consisting of the option name and its value.
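To make the `--mount` grammar concrete, here is a simplified parser that turns a `--mount` value into a dictionary, normalising the aliases listed above, plus a converter from the short `-v` form (`<source>:<destination>[:ro]`). This is a sketch, not Docker's own parser: it only handles the keys described here, treats a `-v` source starting with `/` as a host path (Linux-style paths only), and defaults `type` to `volume`, which is why a value like `source=<volume-name>,target=...` works without `type`. Fields are split with Python's `csv` module so that a quoted field containing commas stays intact.

```python
from __future__ import annotations

import csv

# Aliases from the key list above, mapped to one canonical name.
ALIASES = {"src": "source", "dst": "destination", "target": "destination", "ro": "readonly"}


def parse_mount(spec: str) -> dict:
    """Parse a --mount value such as 'type=volume,src=myvol,dst=/data,ro' (simplified)."""
    fields = next(csv.reader([spec]))
    mount: dict = {"type": "volume", "readonly": False, "volume-opt": {}}
    for field in fields:
        key, sep, value = field.partition("=")
        key = ALIASES.get(key, key)
        if key == "readonly":
            # A bare 'readonly'/'ro' means true; an explicit value such as 'false' is honoured.
            mount["readonly"] = (not sep) or value.lower() in ("true", "1")
        elif key == "volume-opt":
            # volume-opt may repeat; each one carries its own name=value pair.
            opt_name, _, opt_value = value.partition("=")
            mount["volume-opt"][opt_name] = opt_value
        elif not sep:
            raise ValueError(f"expected <key>=<value>, got {field!r}")
        else:
            mount[key] = value
    if "destination" not in mount:
        raise ValueError("a destination (dst/target) is required")
    return mount


def volume_flag_to_mount(v: str) -> str:
    """Convert a Linux-style -v value ('src:dst[:ro|rw]' or just 'dst') to --mount syntax."""
    parts = v.split(":")
    if len(parts) == 1:  # anonymous volume: only the container path is given
        return f"type=volume,destination={parts[0]}"
    if len(parts) == 2:
        source, destination, options = parts[0], parts[1], ""
    elif len(parts) == 3:
        source, destination, options = parts
    else:
        raise ValueError(f"unexpected -v value: {v!r}")
    kind = "bind" if source.startswith("/") else "volume"
    spec = f"type={kind},source={source},destination={destination}"
    for opt in filter(None, options.split(",")):
        if opt == "ro":
            spec += ",readonly"
        elif opt != "rw":
            raise ValueError(f"option {opt!r} is not handled by this sketch")
    return spec


if __name__ == "__main__":
    print(parse_mount("src=myvol,target=/data,ro,volume-opt=type=nfs"))
    print(volume_flag_to_mount("myvol:/data:ro"))
```

The conversion covers syntax only: for a bind mount whose host path does not exist, `-v` creates it as a directory, whereas `--mount` reports an error.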

Examples: create a volume while starting the container

With `--mount`:

```shell
docker run -d --name <container-name> --mount source=<volume-name>,target=/<destination-path-in-container> <image>:latest
```

With `--volume`:

```shell
docker run -d --name <container-name> -v <volume-name>:/<destination-path-in-container> <image>:latest
```

In both cases, Docker creates the volume if it doesn't already exist. Removing the container does not remove the named volume, so clean up both:

```shell
docker container stop <container-name>
docker container rm <container-name>
docker volume rm <volume-name>
```

Using volumes with Docker Compose:

Using a Docker Compose service with a volume. On the first invocation of `docker-compose up`, the volume is created; the same volume is reused on subsequent invocations:

```yaml
services:
  frontend:
    image: node:lts
    volumes:
      - myapp:/home/node/app
volumes:
  myapp:
```

Referencing an already created volume inside `docker-compose.yml`:

```yaml
services:
  frontend:
    image: node:lts
    volumes:
      - myapp:/home/node/app
volumes:
  myapp:
    external: true
```

Using a bind mount with Docker Compose:

```yaml
version: "3.9"
services:
  frontend:
    image: node:lts
    volumes:
      - type: bind
        source: ./static
        target: /opt/app/static
volumes:
  myapp:
```

Volume drivers to share data among machines or cloud providers:

- When building fault-tolerant applications, you might need to configure multiple replicas of the same service to have access to the same files.

- There are several ways to achieve this when developing your applications. One is to add logic to your application to store files on a cloud object storage system like Amazon S3. Another is to create volumes with a driver that supports writing files to an external storage system like NFS or Amazon S3.

- Volume drivers allow you to abstract the underlying storage system from the application logic. For example, if your services use a volume with an NFS driver, you can update the services to use a different driver, say one that stores data in the cloud, without changing the application logic.
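As an illustration of the NFS case, Docker's built-in `local` driver can mount an NFS export when given `type`, `o`, and `device` options through `docker volume create --opt`. The sketch below only builds that command; the helper name, server address, export path, and volume name are placeholders, and whether the mount succeeds depends on the Docker host being able to reach and mount the NFS server.

```python
from __future__ import annotations

import shlex


def nfs_volume_create_args(name: str, server: str, export: str,
                           extra_opts: str = "rw") -> list[str]:
    """Build `docker volume create` args for an NFS-backed volume using the local driver."""
    if not export.startswith("/"):
        raise ValueError("export path must be absolute")
    mount_opts = f"addr={server}" + (f",{extra_opts}" if extra_opts else "")
    return [
        "docker", "volume", "create",
        "--driver", "local",
        "--opt", "type=nfs",           # filesystem type handed to the mount
        "--opt", f"o={mount_opts}",    # mount options: NFS server address plus e.g. rw
        "--opt", f"device=:{export}",  # remote export path, prefixed with ':'
        name,
    ]


if __name__ == "__main__":
    # Placeholder server and export; substitute your own NFS server.
    print(shlex.join(nfs_volume_create_args("shared-data", "10.0.0.10", "/exports/data")))
```

Once the volume exists on a host, containers mount it like any other named volume (for example `--mount source=shared-data,target=/data`), so replicas on different hosts that define the volume the same way see the same files.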
