Azure Video Analyzer on IoT Edge:

- An extensible platform that lets you connect different video analysis edge modules.

- Can be used in conjunction with computer vision SDKs and toolkits.

Video Analyzer Pipeline:

- Provides a way to create video pipelines.

- A pipeline is where media is actually processed. Pipelines can be associated with individual cameras.

Pipeline Sources:

- RTSP source:

- An RTSP source node enables you to capture media from an RTSP server.

- The RTSP source node in a pipeline acts as a client and can establish a session with an RTSP server.

- Many devices such as most IP cameras have a built-in RTSP server.

- ONVIF (Open Network Video Interface Forum) mandates RTSP support in its definition of Profile G, S, and T compliant devices.

- The RTSP source node requires you to specify an RTSP URL, along with credentials to enable an authenticated connection.

- IoT Hub message source:

- Messages can be sent from other modules, or apps running on the Edge device, or from the cloud. Such messages can be delivered (routed) to a named input on the video analyzer module.
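
A minimal sketch of the RTSP source settings described above (an RTSP URL plus credentials), assembled as a plain Python dict. The `@type` strings and property names are assumed to mirror the Video Analyzer topology JSON and should be checked against the official schema; `make_rtsp_source`, the camera URL, and the credentials are hypothetical.

```python
from urllib.parse import urlparse


def make_rtsp_source(name, url, username, password):
    """Build a dict describing an RTSP source node (hypothetical helper)."""
    parsed = urlparse(url)
    if parsed.scheme != "rtsp":
        raise ValueError(f"expected an rtsp:// URL, got {url!r}")
    if not parsed.hostname:
        raise ValueError("RTSP URL must include a host")
    return {
        # Assumed type names; verify against the topology schema.
        "@type": "#Microsoft.VideoAnalyzer.RtspSource",
        "name": name,
        "endpoint": {
            "@type": "#Microsoft.VideoAnalyzer.UnsecuredEndpoint",
            "url": url,
            "credentials": {
                "@type": "#Microsoft.VideoAnalyzer.UsernamePasswordCredentials",
                "username": username,
                "password": password,
            },
        },
    }
```

In practice the URL and credentials would normally come from pipeline parameters rather than being hard-coded in the node.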

Pipeline Processors:

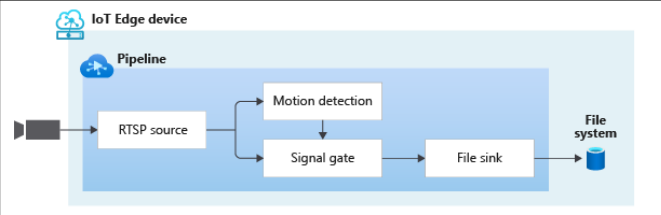

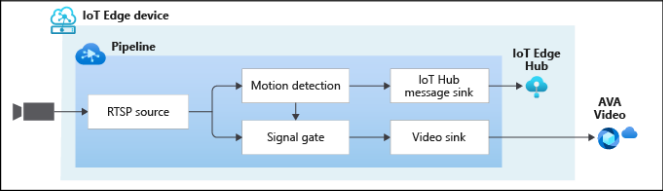

- Motion detection processor:

- Enables you to detect motion in live video.

- Examines incoming video frames and determines if there is movement in the video. If motion is detected, it passes on the video frame to the next node in the pipeline and emits an event.

- The motion detection processor node (in conjunction with other nodes) can be used to trigger recording of the incoming video when there is motion detected.
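
To make the "forward the frame only when there is movement" behaviour concrete, here is a small frame-differencing sketch. It is only an illustration: the notes do not say which algorithm Video Analyzer uses, and the function names, thresholds, and the list-of-lists grayscale frame format are all assumptions.

```python
def motion_detected(prev_frame, frame, pixel_threshold=25, changed_fraction=0.01):
    """Return True if enough pixels changed between two grayscale frames."""
    total = 0
    changed = 0
    for prev_row, row in zip(prev_frame, frame):
        for before, after in zip(prev_row, row):
            total += 1
            if abs(after - before) > pixel_threshold:
                changed += 1
    return total > 0 and changed / total >= changed_fraction


def filter_motion(frames):
    """Yield (index, frame) only for frames that differ from their predecessor."""
    previous = None
    for index, frame in enumerate(frames):
        if previous is not None and motion_detected(previous, frame):
            # The frame is passed downstream; a motion event would be emitted here.
            yield index, frame
        previous = frame
```

Frames without motion are simply dropped, which is what lets a downstream node record only the interesting parts of the video.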

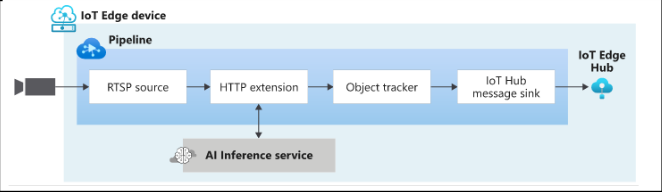

- HTTP extension processor:

- Enables you to extend the pipeline to your own custom IoT Edge module.

- This node takes decoded video frames as input, and relays such frames to an HTTP REST endpoint exposed by your module, where you can analyze the frame with an AI model and return inference results back.

- Node has a built-in image formatter for scaling and encoding of video frames before they are relayed to the HTTP endpoint.

- The image encoder supports JPEG, PNG, BMP, and RAW formats.

- Enables extensibility scenarios using the HTTP extension protocol, to expose your own AI to a pipeline via an HTTP REST endpoint.

- Usage Scenarios:

- You want better interoperability with existing HTTP Inferencing systems.

- Low-performance data transfer is acceptable.

- You want to use a simple request-response interface to Video Analyzer.

- Typically used with low frame rates, for performance reasons.
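
The request-response pattern above can be sketched with Python's standard library: a tiny HTTP server that accepts an encoded frame via POST and returns a JSON result, plus a client that stands in for the extension node. The `/score` path, the handler and function names, and the JSON shape are hypothetical placeholders, not the Video Analyzer inference schema.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class ScoreHandler(BaseHTTPRequestHandler):
    """Accepts an encoded frame via POST and returns a JSON result."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        frame_bytes = self.rfile.read(length)
        # Placeholder "model": report only the payload size.
        result = {"inferences": [{"type": "other", "frameBytes": len(frame_bytes)}]}
        body = json.dumps(result).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the example quiet


def start_server(port=0):
    """Start the server on a background thread; port 0 picks a free port."""
    server = HTTPServer(("127.0.0.1", port), ScoreHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def post_frame(url, frame_bytes, content_type="image/jpeg"):
    """Stand-in for the extension node: POST one frame, return the parsed JSON."""
    request = urllib.request.Request(
        url, data=frame_bytes, headers={"Content-Type": content_type}, method="POST"
    )
    # An empty ProxyHandler bypasses any system proxy for this local call.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    with opener.open(request, timeout=5) as response:
        return json.loads(response.read())
```

Every frame costs one full HTTP round trip, which is one reason this route suits low frame rates.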

- gRPC extension processor:

- Takes decoded video frames as the input and relays such frames to a gRPC endpoint exposed by your module.

- gRPC extension protocol offers high content transfer performance using:

- shared memory or

- directly embedding the frame into the body of gRPC messages.

- Ideal for scenarios where performance and/or optimal resource utilization is a priority.

- A gRPC session is a single connection from the gRPC client to the gRPC server over the TCP/TLS port.

- In a single session: The client sends a media stream descriptor followed by video frames to the server as a protobuf message over the gRPC stream session.

- The server validates the stream descriptor, analyses the video frame, and returns inference results as a protobuf message.

- Use case: use a gRPC extension processor node when you:

- Want to use a structured contract (for example, structured messages for requests and responses)

- Want to use Protocol Buffers (protobuf) as the underlying message interchange format for communication.

- Want to communicate with a gRPC server in a single stream session, instead of the traditional request-response model that needs a custom request handler to parse incoming requests and call the right implementation functions.

- Want low latency and high throughput communication between Video Analyzer and your module.

- Typically used with high frame rates, for performance reasons.
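
The session flow above (descriptor first, then frames, with results streamed back) can be simulated in plain Python. This sketch deliberately uses generators and dataclasses instead of real gRPC and protobuf, so that it runs without extra packages; every class, field, and function name is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class StreamDescriptor:
    width: int
    height: int
    encoding: str


@dataclass
class Frame:
    sequence: int
    data: bytes


def client_messages(descriptor, frames):
    """Client side: the descriptor goes first, then the frames."""
    yield descriptor
    for frame in frames:
        yield frame


def server_session(messages, supported=("jpeg", "raw")):
    """Server side: validate the descriptor, then answer each frame."""
    stream = iter(messages)
    first = next(stream, None)
    if not isinstance(first, StreamDescriptor):
        raise ValueError("session must start with a stream descriptor")
    if first.encoding not in supported:
        raise ValueError(f"unsupported encoding: {first.encoding}")
    for frame in stream:
        # Placeholder analysis: echo the sequence number and payload size.
        yield {"ack": frame.sequence, "bytes": len(frame.data)}
```

Because the descriptor is validated once per session, per-frame messages can stay small, which fits the high-throughput use case.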

- Cognitive Services extension processor:

- Enables you to extend the pipeline to the Spatial Analysis IoT Edge module.

- Usage Scenarios:

- You want better interoperability with existing Spatial Analysis operations.

- You want the benefits of the gRPC protocol together with the accuracy and performance of Microsoft-built and supported AI.

- You want to analyze multiple camera feeds at low latency and high throughput.

- Signal gate processor:

- Enables you to conditionally forward media from one node to another.

- The signal gate processor node must be immediately followed by a video sink or file sink.
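
Conditional forwarding can be pictured as a gate that opens for a fixed window after each triggering event. The sketch below is a simplified model under that assumption; the real node's timing properties may differ, and `gate_frames` and `activation_seconds` are hypothetical names.

```python
def gate_frames(frames, event_times, activation_seconds=5.0):
    """Forward (timestamp, frame) pairs that fall inside an open gate window."""
    windows = [(t, t + activation_seconds) for t in sorted(event_times)]
    for timestamp, frame in frames:
        if any(start <= timestamp < end for start, end in windows):
            yield timestamp, frame
```

With an event at t = 3 and a 2-second window, only the frames stamped 3 and 4 would reach the sink that follows the gate.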

- Object tracker processor:

- Node comes in handy when you need to detect objects in every frame, but the edge device does not have the necessary compute power to be able to apply the AI model on every frame.

- One common use of the object tracker processor node is to detect when an object crosses a line.

- Line crossing processor:

- Enables you to detect when an object crosses a line defined by you.

- Also maintains a count of the number of objects that cross the line.

- This node must be used downstream of an object tracker processor node.
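
One way to see why line crossing sits downstream of the object tracker: the test needs the same object's position in consecutive frames. The sketch below uses a standard segment-intersection check on tracked centroids; it illustrates the idea and is not the node's actual implementation. The data layout and function names are assumptions.

```python
def _orientation(a, b, c):
    """Sign of the cross product (b - a) x (c - a)."""
    value = (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])
    return (value > 0) - (value < 0)


def crosses(line_start, line_end, prev_pos, curr_pos):
    """True if the move prev_pos -> curr_pos strictly crosses the line segment."""
    d1 = _orientation(line_start, line_end, prev_pos)
    d2 = _orientation(line_start, line_end, curr_pos)
    d3 = _orientation(prev_pos, curr_pos, line_start)
    d4 = _orientation(prev_pos, curr_pos, line_end)
    return d1 * d2 < 0 and d3 * d4 < 0


def count_crossings(line, tracks):
    """Count crossings over all tracked objects.

    tracks maps a track id to its list of centroid positions over time.
    """
    start, end = line
    count = 0
    for positions in tracks.values():
        for prev_pos, curr_pos in zip(positions, positions[1:]):
            if crosses(start, end, prev_pos, curr_pos):
                count += 1
    return count
```

Without stable track ids from the tracker, there would be no way to pair `prev_pos` and `curr_pos` for the same object.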

Pipeline Sinks:

- Video Sink:

- Node saves video and associated metadata to your Video Analyzer cloud resource.

- Video can be recorded continuously or discontinuously (based on events).

- Video sink node can cache video on the edge device if connectivity to the cloud is lost and resume uploading when connectivity is restored.

- File Sink:

- Node writes video to a location on the local file system of the edge device.

- There can only be one file sink node in a pipeline, and it must be downstream from a signal gate processor node.

- This limits the duration of the output files to values specified in the signal gate processor node properties.

- You can also set the maximum size that the Video Analyzer edge module can use to cache data.

- IoT Hub message sink:

- Publishes events to the IoT Edge hub.

- The IoT Edge hub can be configured to route the data to other modules or apps on the edge device, or to IoT Hub in the cloud.
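
The ordering rules scattered through these notes (a signal gate must be immediately followed by a video or file sink, a file sink needs an upstream signal gate and may appear only once, and line crossing needs an upstream object tracker) can be collected into a small checker. This is a sketch over a hypothetical dict-based pipeline description; the `kind` labels are invented for the example and are not Video Analyzer type names.

```python
def ancestors(nodes, name):
    """Return the names of all nodes upstream of the given node."""
    seen = set()
    stack = list(nodes[name]["inputs"])
    while stack:
        current = stack.pop()
        if current not in seen:
            seen.add(current)
            stack.extend(nodes[current]["inputs"])
    return seen


def validate(nodes):
    """Check the ordering rules from these notes; return a list of problems."""
    problems = []
    kinds = {name: node["kind"] for name, node in nodes.items()}
    file_sinks = [n for n, k in kinds.items() if k == "fileSink"]
    if len(file_sinks) > 1:
        problems.append("more than one file sink")
    for name, node in nodes.items():
        upstream_kinds = {kinds[a] for a in ancestors(nodes, name)}
        if node["kind"] == "fileSink" and "signalGate" not in upstream_kinds:
            problems.append(f"{name}: file sink needs an upstream signal gate")
        if node["kind"] == "lineCrossing" and "objectTracker" not in upstream_kinds:
            problems.append(f"{name}: line crossing needs an upstream object tracker")
        for parent in node["inputs"]:
            if kinds[parent] == "signalGate" and node["kind"] not in ("videoSink", "fileSink"):
                problems.append(f"{name}: only a video or file sink may follow a signal gate")
    return problems
```

A source, motion detector, signal gate, and file sink wired in that order passes with no problems reported.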

Pipeline Limitations:

- Only IP cameras with RTSP support can be used.

- Configure these cameras to use H.264 video and AAC audio.

- Only RTSP with interleaved RTP streams is supported. In this mode, RTP traffic is tunneled through the RTSP TCP connection.

- RTP traffic over UDP is not supported.
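
For context on what "interleaved" means on the wire: RFC 2326 (section 10.12) frames each RTP packet inside the RTSP TCP stream as a `$` byte, a one-byte channel id, a two-byte big-endian length, and then the payload. The parser below sketches that framing only; it is not Video Analyzer code, and `parse_interleaved` is a hypothetical name. A real client would also have to handle RTSP text responses arriving on the same connection.

```python
import struct


def parse_interleaved(buffer):
    """Split a TCP byte buffer into (channel, payload) interleaved frames.

    Returns the parsed frames and any trailing bytes of an incomplete frame.
    """
    frames = []
    offset = 0
    while len(buffer) - offset >= 4:
        magic, channel, length = struct.unpack_from("!BBH", buffer, offset)
        if magic != 0x24:  # '$' marks an interleaved binary frame
            raise ValueError("not an interleaved frame; RTSP text message expected")
        if len(buffer) - offset - 4 < length:
            break  # incomplete frame: wait for more data
        start = offset + 4
        frames.append((channel, buffer[start:start + length]))
        offset = start + length
    return frames, buffer[offset:]
```

Since everything travels over the single TCP connection, no separate UDP ports need to be opened between camera and edge device.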

Pipeline Extensions:

- Extension plugin can make use of traditional image-processing techniques or computer vision AI models.

- Extension processor node relays video frames to the configured endpoint and acts as the interface to your extension.

- The connection can be made to a local or remote endpoint, and it can be secured by authentication and TLS encryption, if necessary.

- You must provide an HTTP or gRPC server; the Video Analyzer module acts as the HTTP or gRPC client and connects through one of these nodes:

- HTTP extension processor

- gRPC extension processor

- Cognitive Services extension processor

- Custom Inferencing model:

- Run inference models of your choice on any available inference runtime, such as ONNX, TensorFlow, PyTorch, or others in your own docker container.

- Invoked via the HTTP, gRPC, or Cognitive Services extension processor.

- The Video Analyzer cloud service enables you to play back the video and video analytics from such workflows.

Use-cases:

- Detect motion and record video on edge devices.

- Detect motion and record video to Video Analyzer in the cloud.

- Track objects in a live video.